Projects

This page presents various projects that are being worked on or have been completed as part of RoboCup. Some of them are projects from the lecture "Image Understanding" and the elective "Interactive mobile Robots".

Here you find the publications of Professor Dr. rer. nat. Matthias Rätsch.

New: you can download our open source programs here.

SCITOS

QR-Code/OCR control and speech output

Reading QR codes and text via OCR.

Text-to-speech (TTS) software is used for all outputs, such as announcing that the goal has been reached, error messages, or the input read from a QR code or via OCR.

- 16SS:

Here you find a summary.

Here you find a short video.

Here you find the documentation.

- Implement a number detection for the floor number in the lift.

Here you find a short video.

Here you find a summary.

Here you find the documentation.

- Use of commercial Ivona TTS

Here you find a short video.

Here you find a summary.

Here you find the documentation.

- Localization based on QR code and OCR

Leonie reads QR-codes and interacts with the user.

Here you find a short video.

Here you find a summary.

- Use of the open source software Mary TTS

Here you find a short video.

Here you find a summary.

- Using OpenCV for OCR

Here you find the documentation.

Using a 3D sensor to detect body gestures.

- Gesture detection for SCITOS

With the help of KineticSpace (©Professor Wölfel), gestures are learned, detected and recognized, and the SCITOS reacts to them.

Here you find a summary.

Here you find a short video.

Here you find the documentation.

- Theoretical study on the use of KineticSpace (©Professor Wölfel)

Here you find a summary.

Here you find the documentation.

- Waving detection with MSKinect

Detecting primitive gestures like waving to trigger SCITOS actions, using the MSKinect.

Here you find a summary.

Here you find the documentation.

- Gesture detection for SCITOS

With the help of KineticSpace (©Professor Wölfel), gestures are learned, detected and recognized, and the SCITOS reacts to them.

Here you find a summary.

Here you find a short video.

Here you find the documentation (for the password, please contact Felix Ostertag).

- Gesture detection

Using the Kinetic Space software, gestures are learned and can be recognized afterwards.

Here you find a summary.

Here you find a short video.

Here you find the documentation (for the password, please contact Felix Ostertag).

Leonie recognizes people and looks at them.

In project 1, the microphone array of the MS Kinect is used to detect where a sound comes from. Leonie reacts to whistling, for example.

In project 2, the FaceVACS SDK from Cognitec is used to find the faces.
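The idea behind locating a sound with a microphone array, as in project 1, can be illustrated with a minimal sketch. It is not the project's code: it assumes only two microphones, a far-away source, and a known arrival-time difference, and the function name `direction_from_delay` and the microphone spacing in the usage note are hypothetical.

```python
import math

SPEED_OF_SOUND_M_S = 343.0  # approximate speed of sound in air at about 20 °C


def direction_from_delay(delay_s: float, mic_spacing_m: float) -> float:
    """Return the bearing of a far-away sound source in degrees.

    0 degrees is straight ahead. The sign follows the sign of delay_s
    (positive when the sound reaches the second microphone later).
    Hypothetical helper for illustration only.
    """
    # Difference in path length between the two microphones.
    path_difference_m = SPEED_OF_SOUND_M_S * delay_s
    # Clamp so that measurement noise cannot push the asin argument
    # outside its valid range [-1, 1].
    ratio = max(-1.0, min(1.0, path_difference_m / mic_spacing_m))
    return math.degrees(math.asin(ratio))
```

With an assumed spacing of 0.2 m, a call such as `direction_from_delay(0.0003, 0.2)` gives the bearing the robot would have to turn towards. A real array with more than two microphones would combine several such pairs.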

Project 1:

Here you find a short video.

Here you find a summary.

Here you find the documentation.

Project 2:

Here you find a short video.

Here you find a summary.

Here you find the documentation.

Basics:

Trade Show Robot: Robot presents facial recognition and analysis at trade fairs

Using the Cognitec SDK, the basis for a trade show robot was created. In addition to a guide for installing and using the SDK, an example dialogue and a first example program were developed.

Here you will find the resulting package.

Here you will find the short summary.

Detection of human emotions and facial expressions.

- 16WS: Emotion detection with SVM

Here you find a summary.

Here you find a short video.

Here you find the documentation.

- 16SS: Emotion detection with CNN

Here you find a summary.

Here you find a short video.

Here you find the documentation.

- Evaluation of Kinect 2 for emotion detection compared to Kinect 1

Here you find a summary.

Here you find a short video.

Here you find the documentation.

- Emotion detection with a neural network

Here you find a summary.

Here you find a short video.

Here you find the documentation.

- Emotion detection based on MSKinect and faceshift SDK

Emotion analysis using a Microsoft Kinect sensor and a C++ application that receives data from faceshift's network stream.

With faceshift, 48 action units of the face can be tracked and measured, instead of 6 with the Kinect SDK.

Here you find a summary.

Here and here you find a short video.

- Emotion detection with MSKinect

Here you find the documentation.

Here you find a summary.

(COG third-party funded project)

Using an active camera system, persons are detected and then followed by tracking algorithms.

- 16SS: Consolidation and merging of existing work, and a demo zoom cam player

Here you find a summary.

Here you find a short video.

Here you find the documentation.

- Dual-Cam-System-Tracking

A person is tracked on a wide-angle camera, while the PTU is used to focus a high-res camera on the person.

Here you find a summary.

Here you find a short video.
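How a position in the wide-angle image might be turned into a pan/tilt command for the PTU in such a dual-camera setup can be sketched as follows. This is only an illustration under stated assumptions, not the project's implementation: it assumes an ideal pinhole camera without lens distortion whose optical axis coincides with the PTU's zero position, and the function name and all parameters are hypothetical.

```python
import math


def pixel_to_pan_tilt(px, py, image_width, image_height, hfov_deg, vfov_deg):
    """Convert a pixel in the wide-angle image to (pan, tilt) in degrees.

    Hypothetical helper: assumes a pinhole model and that the camera's
    optical axis is aligned with the pan-tilt unit's zero position.
    """
    # Focal lengths in pixels, derived from the fields of view.
    fx = (image_width / 2) / math.tan(math.radians(hfov_deg) / 2)
    fy = (image_height / 2) / math.tan(math.radians(vfov_deg) / 2)
    # Offset of the tracked person from the image centre.
    dx = px - image_width / 2
    dy = py - image_height / 2
    pan = math.degrees(math.atan2(dx, fx))
    # Image y grows downwards, so a target above the centre gives positive tilt.
    tilt = math.degrees(math.atan2(-dy, fy))
    return pan, tilt
```

In practice the two cameras are mounted at different positions, so a real system would also need a calibration step to correct the offset between them.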

SCITOS follows a person, including turning and driving backwards, while maintaining a constant distance.
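Keeping a constant distance while following, including reversing when the person comes closer, can be illustrated with a simple proportional controller. This is a minimal sketch, not the controller used on the SCITOS: the function name, the gains, the target distance, and the speed limits are all assumed values chosen for illustration.

```python
def follow_command(distance_m, bearing_rad, target_distance_m=1.5,
                   k_lin=0.8, k_ang=1.5, max_lin=0.5, max_ang=1.0):
    """Return (linear m/s, angular rad/s) for a differential-drive base.

    Hypothetical helper: distance_m and bearing_rad describe where the
    tracked person is relative to the robot; all defaults are assumptions.
    """
    def clamp(value, limit):
        return max(-limit, min(limit, value))

    # Positive error: person too far away, drive forward.
    # Negative error: person too close, drive backwards.
    linear = clamp(k_lin * (distance_m - target_distance_m), max_lin)
    # Turn towards the person to keep them centred in front of the robot.
    angular = clamp(k_ang * bearing_rad, max_ang)
    return linear, angular
```

A person standing 1.0 m in front of the robot would produce a negative linear speed, so the robot backs away until the assumed 1.5 m target distance is restored.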

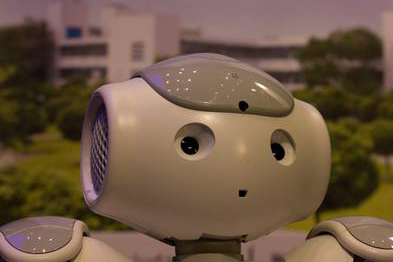

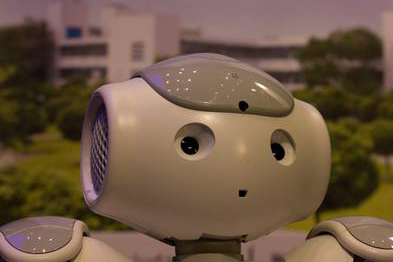

NAO

The NAO recognizes persons by their faces.

- Improvement of the software to recognize multiple persons in one picture at once.

Here you find a short video.

Here you find a summary.

Here you find the documentation.

- Using the FaceVACS SDK from Cognitec, faces are learned and can then be used to recognize persons.

Here you find a short video.

Here you find a summary.

Here you find the documentation.

Symptoms of old age also arise in robots: in this case, arthritis in the shoulder and mental overload.

Unfortunately, both Naos, Kurt and Bert, show wear and tear. The shoulder joints are cracking and threaten to stop working soon. The same happened to the neck gears, and like them, the shoulders should get replacement parts. These also need to be designed and made from scratch.

While working, the processors in the heads tend to overheat. This should be taken care of by using better cooling fans.

Both of our Naos had a broken neck - the operation has finally been successful!

Both Naos suffered a broken neck in a fall; the plastic gears did not withstand the strain. Thanks to the commitment of RT-Lion Sasha Brown, several thousand € in repair costs were saved. Now a machined aluminum gear supports the center of the mind of Kurt and Bert!

Becoming acquainted with the graphical programming interface Choregraphe as well as the simulation environment Webots.

To protect the real Nao robot and to test programs virtually, either the simulator in Choregraphe or the external simulation tool Webots can be used.

The advantages and disadvantages of both options, as well as their use, were recorded and documented.

Here you find the documentation.

MixedReality

MR-SmallTable–„Find the Bot“

Use of polarizing filters to improve image capture.

To improve the detection of the position marker on the MR table, a holder for a polarizing filter was designed. The polarizing filter is meant to filter out the image shown on the screen, so that the marker can be detected easily against a background that appears black to the camera.

Surprisingly, the detection rate did not improve but actually got worse, probably because light reflections now have a greater impact against the black background. The accuracy of the position detection, however, improved significantly.

Here you find the documentation.

Defective IR interface turns mixed reality into virtual reality

Because the IR interface, which connects the bots to the server, was broken for some time, our mixed reality was limited to purely virtual games.

After many attempts, a working prototype has finally been built and tested!

Miscellaneous

A foosball table with an autonomously playing goalie.

Instructional video here.

16WS:

Here you find a summary.

Here you find a short video.

Here you find the documentation.

16SS:

Here you find a summary.

Here you find the documentation.

15WS:

Here you find a summary.

Here you find a short video.

Here you find the documentation.

15SS:

Here you find a summary.

Here you find a short video.

Here you find the documentation.

14WS:

Here you find a summary.

Here you find the documentation.

13WS:

Here you find a summary.

Here you find the documentation.

Here you find collaborations with the robotics lab of Prof. Gruhler.

15WS: Kuka robot web presentation

Here you find a summary.

Here you find a short video.

Here you find the documentation.

15WS: MANZ Delta Robot

Here you find a summary.

Here you find a short video.

Here you find the documentation.

15SS: Jigsaw Puzzle Robot

Here you find a summary.

Here you find a short video.

Here you find the documentation.

Detecting hand gestures for establishing non-verbal communication.

- CNN for Finger Alphabet

Here you find a summary.

Here you find a short video.

Here you find the documentation.

- Keyboard simulation and Finger Alphabet

Here you find a summary.

Here you find a short video.

Here you find the documentation.

- Counting fingers with a webcam.

Here you find a summary.

Here you find a short video.

Here you find the documentation.

- Hand gestures with Leap Motion, simulating a keyboard as an example

Here you find a summary.

Here you find a short video.

Here you find the documentation.

- Hand gestures with Leap Motion

Controlling the SCITOS using hand gestures with Leap Motion.

Here you find a summary.

Here you find a short video.

- Leap Motion talk

Here you find a summary.

Here you find a short video.

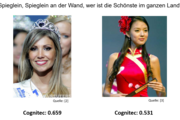

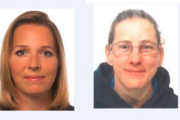

Data collection and statistics on the attractiveness of females, within the course "computer science engineer" in the mechanical engineering program. Please note: such an algorithm could be used to detect discrimination in larger application processes with mainly female applicants.

Here you find a summary.

You can find the materials here.